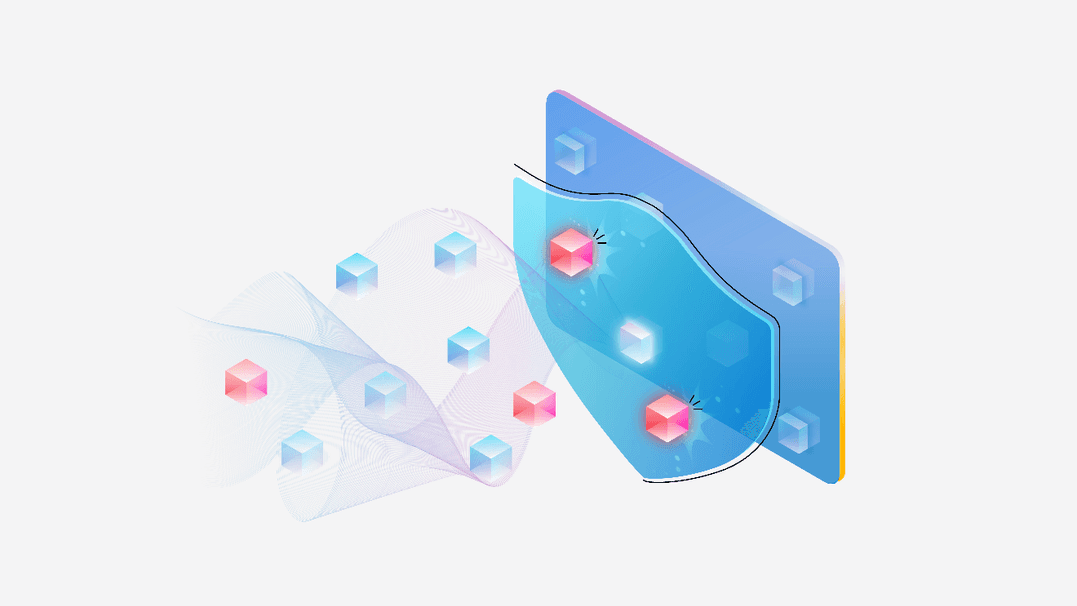

Generative AI models can be exploited by malicious actors. To mitigate this risk, we integrate safety mechanisms that keep the behavior of large language models (LLMs) within a safe operational scope. Despite these safeguards, LLMs can still be vulnerable to adversarial inputs that bypass the integrated safety protocols.

Prompt Shields is a unified API that analyzes LLM inputs and detects adversarial user input attacks.
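As a rough illustration of what calling such an API might look like, the sketch below builds a request for a user prompt and interprets the verdict. None of these details come from this page and all should be treated as assumptions to check against the current REST reference: the `text:shieldPrompt` route, the `api-version` value, the `Ocp-Apim-Subscription-Key` header, and the `userPrompt`, `documents`, `userPromptAnalysis`, `documentsAnalysis`, and `attackDetected` field names. The helper names (`build_shield_request`, `attack_detected`, `shield_prompt`) are hypothetical.

```python
import json
import urllib.request

# Assumption: API version string; check the service's REST reference.
API_VERSION = "2024-09-01"


def build_shield_request(endpoint, api_key, user_prompt):
    """Build (but do not send) a POST request for one user prompt.

    The route, header name, and body fields are assumptions, not taken
    from this page.
    """
    url = (
        f"{endpoint.rstrip('/')}/contentsafety/text:shieldPrompt"
        f"?api-version={API_VERSION}"
    )
    # "documents" is left empty: only the user prompt is analyzed here.
    body = json.dumps({"userPrompt": user_prompt, "documents": []})
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": api_key,
    }
    return urllib.request.Request(
        url, data=body.encode("utf-8"), headers=headers, method="POST"
    )


def attack_detected(response_json):
    """Return True if any analyzed input was flagged as an attack.

    Missing fields are treated as "not flagged" (assumed response shape).
    """
    user_flag = response_json.get("userPromptAnalysis", {}).get(
        "attackDetected", False
    )
    doc_flags = (
        doc.get("attackDetected", False)
        for doc in response_json.get("documentsAnalysis", [])
    )
    return bool(user_flag or any(doc_flags))


def shield_prompt(endpoint, api_key, user_prompt):
    """Send the request and return the boolean verdict (needs network access)."""
    request = build_shield_request(endpoint, api_key, user_prompt)
    with urllib.request.urlopen(request, timeout=10) as response:
        return attack_detected(json.load(response))
```

In an application, a check like `shield_prompt(endpoint, api_key, prompt)` would typically run before the prompt is forwarded to the LLM, so that a flagged input can be rejected or logged instead of being answered.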
